It’s hard to believe it’s only been a few years since generative AI tools started flooding the internet with low-quality content, or “slop.” Just over a year ago, you had to peruse certain corners of Facebook or spend time wading through the cultural cesspool of Elon Musk’s X to find people posting bizarre and repulsive synthetic media. Now, AI slop feels inescapable, whether you’re watching TV, reading the news, or trying to find a new apartment.

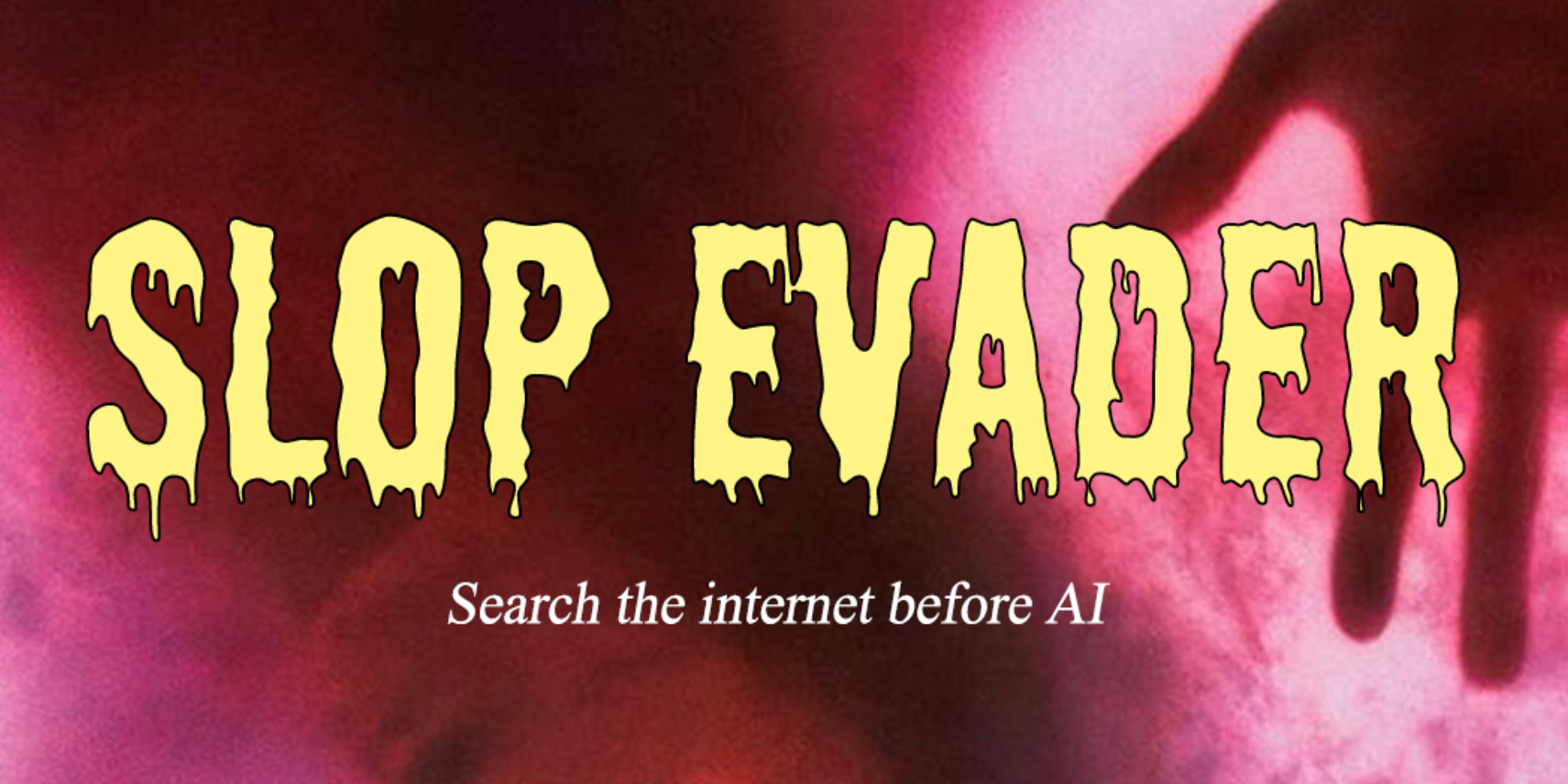

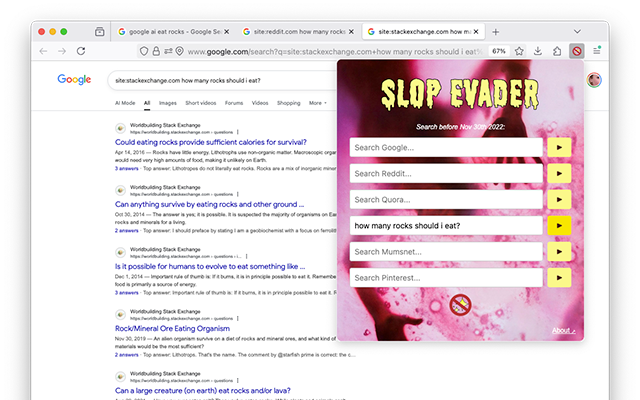

That is, unless you’re using Slop Evader, a new browser tool that filters your web searches to only include results from before November 30, 2022 — the day that ChatGPT was released to the public.

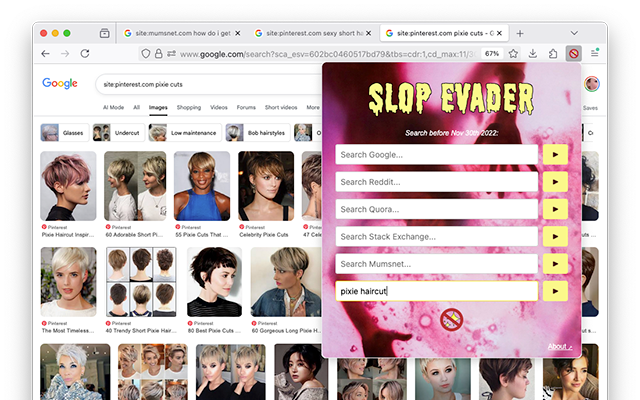

The tool is available for Firefox and Chrome, and has one simple function: showing you the web as it was before the deluge of AI-generated garbage. It uses Google search functions to index popular websites and filter results based on publication date, a scorched-earth approach that virtually guarantees your searches will be slop-free.
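For readers curious how a date-capped search might look in practice, here is a minimal sketch in Python. It is not Slop Evader’s code, and the article does not say exactly which Google features the extension relies on. The sketch assumes Google’s `before:` and `site:` search operators; the function name `build_search_url` and the example query are hypothetical.

```python
from datetime import date
from typing import Optional
from urllib.parse import urlencode

# Cutoff used in the article: the day ChatGPT was released to the public.
CUTOFF = date(2022, 11, 30)


def build_search_url(query: str, site: Optional[str] = None,
                     cutoff: date = CUTOFF) -> str:
    """Build a Google search URL limited to results dated before `cutoff`.

    Assumption: the `before:YYYY-MM-DD` and `site:` operators are used here
    for illustration; the extension itself may work differently.
    """
    terms = [query, f"before:{cutoff.isoformat()}"]
    if site:
        # Optionally restrict the search to a single site.
        terms.append(f"site:{site}")
    # urlencode percent-encodes the operators so the URL is safe to open.
    return "https://www.google.com/search?" + urlencode({"q": " ".join(terms)})


if __name__ == "__main__":
    print(build_search_url("sourdough starter", site="reddit.com"))
```

A filter like this can only be as accurate as the dates the search engine assigns to pages, so it should be read as an approximation of the idea rather than a guarantee.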

Slop Evader was created by artist and researcher Tega Brain, who says she was motivated by the growing dismay over the tech industry’s unrelenting, aggressive rollout of so-called “generative AI”—despite widespread criticism and the wider public’s distaste for it.

Slop Evader in action. Via Tega Brain

“This sowing of mistrust in our relationship with media is a huge thing, a huge effect of this synthetic media moment we’re in,” Brain told 404 Media, describing how tools like Sora 2 have short-circuited our ability to determine reality within a sea of artificial online junk. “I’ve been thinking about ways to refuse it, and the simplest, dumbest way to do that is to only search before 2022.”

One under-discussed impact of AI slop and synthetic media, says Brain, is how it increases our “cognitive load” when viewing anything online. When we can no longer immediately assume any of the media we encounter was made by a human, the act of using social media or browsing the web is transformed into a never-ending procession of existential double-takes.

This added cognitive load extends to everyday tasks that require us to use the internet, which is practically everything nowadays. Looking for a house or apartment? Companies are using genAI tools to generate pictures of houses and rental properties, as well as the ads themselves. Trying to sell your old junk on Facebook Marketplace? Meta’s embrace of generative AI means you may have to compete with bots, fake photos, and AI-generated listings. And when we shop for beauty products or view ads, synthetic media tools are taking our filtered and impossibly idealized beauty standards to absurd and disturbing new places.

In all of these cases, generative AI tools further tip the scales of power, saving companies money while placing a higher cognitive burden on regular people to determine what’s real and what’s not.

“I open up Pinterest and suddenly notice that half of my feed are these incredibly idealized faces of women that are clearly not real people,” said Brain. “It’s shoved into your face and into your feed, whether you searched for it or not.”

Currently, Slop Evader can be used to search pre-GPT archives of seven different sites where slop has become commonplace, including YouTube, Reddit, Stack Exchange, and the parenting site MumsNet. The obvious downside to this, from a user perspective, is that you won’t be able to find anything time-sensitive or current—including this very website, which did not exist in 2022. The experience is simultaneously refreshing and harrowing, allowing you to browse freely without having to constantly question reality, but always knowing that this freedom will be forever locked in time—nostalgia for a human-centric world wide web that no longer exists.

Of course, the tool’s limitations are part of its provocation. Brain says she has plans to add support for more sites, and release a new version that uses DuckDuckGo’s search indexing instead of Google’s. But the real goal, she says, is prompting people to question how they can collectively refuse the dystopian, inhuman version of the internet that Silicon Valley’s AI-pushers have forced on us.

“I don’t think browser add-ons are gonna save us,” said Brain. “For me, the purpose of doing this work is mostly to act as a provocation and give people examples of how you can refuse this stuff, to furnish one’s imaginary for what a politics of refusal could look like.”

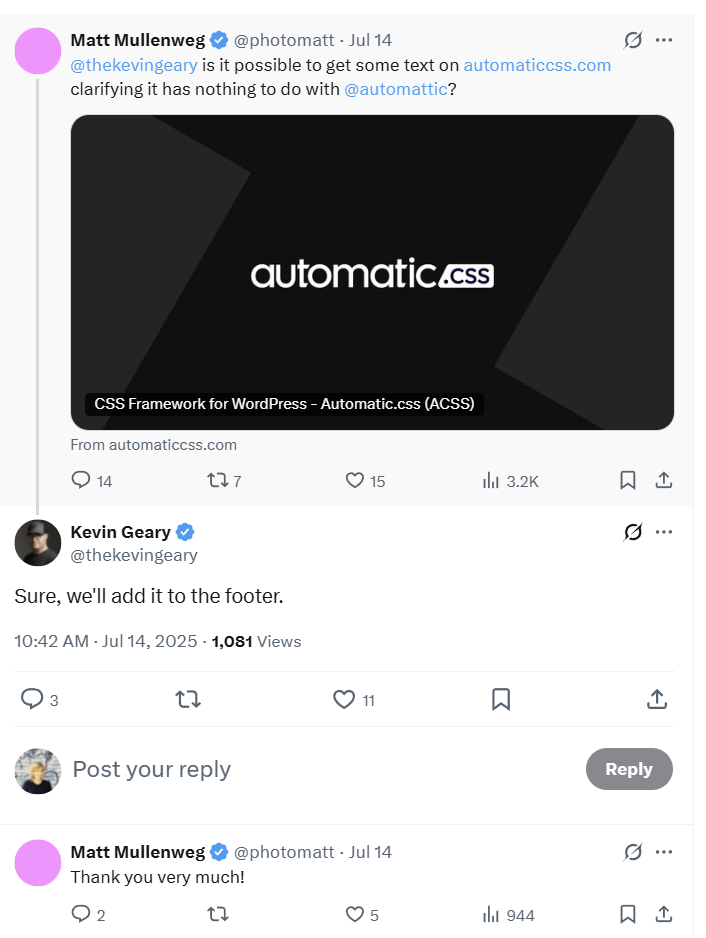

With enough cultural pushback, Brain suggests, we could start to see alternative search engines like DuckDuckGo adding options to filter out search results suspected of having synthetic content (DuckDuckGo added the ability to filter out AI images in search earlier this year). There’s also been a growing movement pushing back against the new AI data centers threatening to pollute communities and raise residents’ electricity bills. But no matter what form AI slop-refusal takes, it will need to be a group effort.

“It’s like with the climate debate, we’re not going to get out of this shitshow with individual actions alone,” she added. “I think that’s the million dollar question, is what is the relationship between this kind of individual empowerment work and collective pushback.”